Bin picking: a well-known issue

A team of researchers at Fraunhofer IPA has been working on teaching robots to pick things up for several years now. Automating the process of “bin picking” is considered a core problem in robotics. Many areas of industrial manufacturing generate large volumes of bulk items that need to be sorted and separated as accurately as possible. It is a monotonous, physically demanding, high-cost task, which makes it a perfect candidate to be assigned to a robot instead. But it is also a huge challenge for robots: most of the industrial robots currently used for these jobs rely on laser scanning and can, at most, tell previously learned objects apart.

Artificial intelligence attempts to mimic human cognitive abilities by recognizing and sorting incoming information the way humans do. However, that doesn’t mean it identifies how to solve the problem. In machine learning, the algorithm develops a way to execute tasks correctly, but only as part of a simulation. Through training with very large volumes of data, “neural networks,” a subdiscipline of machine learning, can recognize patterns and connections and use them as a basis for making decisions and predictions. And that means they improve over time.

Machine learning can also help deal with unknown objects. In the Deep Grasping research project, neural networks were trained in a virtual simulation environment to recognize objects and were then transferred to real-world robots. The robot system is now even able to recognize components that are hooked together and plan its grasping motions so it can unhook them. The researchers are also working on automation systems that configure themselves, for example through automatic selection of grippers and gripping poses: “automation of automation,” so to speak.

Picking things out of a bin and setting them down somewhere else might sound underwhelming in light of the hopes raised by Optimus and similar robots. But these deceptively small jobs represent huge advances in robotics. Kraus points to what is known in the field as Moravec’s paradox: Tasks that seem incredibly simple to us as humans are actually extremely difficult for robots. Or, as Canadian researcher Hans Moravec put it, “It is comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility.”

The goal of incorporating AI into robotics is to help overcome these challenges, and this is viewed as one of the groundbreaking trends in the digital transformation of industrial manufacturing. Market research firm Mordor Intelligence, for example, forecasts an annual growth rate of 29 percent for the robotics market between now and 2029. Smart industrial robots can enhance production speed, accuracy, and security, facilitate troubleshooting, and make production more resilient through predictive maintenance.

To support the industrial sector on the way to Industry 5.0, Fraunhofer IPA teamed up with the Fraunhofer Institute for Industrial Engineering IAO in Stuttgart in 2019 to establish the AI Innovation Center “Learning Systems and Cognitive Robotics”, an applied branch of Cyber Valley, Europe’s biggest research consortium in the field of AI. The goal is to conduct practical research projects as a way to bridge the gap between the technologies involved in cutting-edge AI research and SMEs.

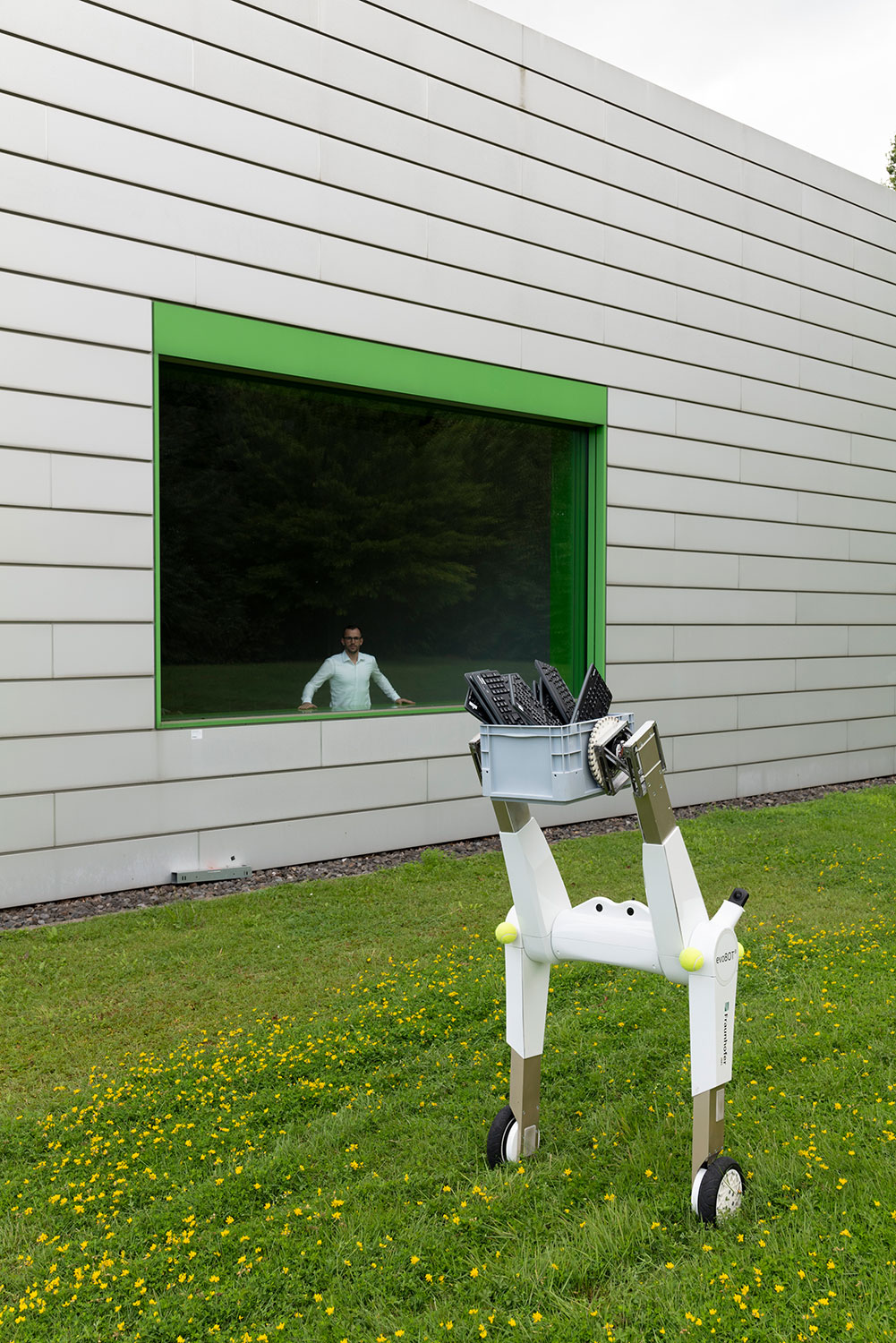

In Magdeburg, the Fraunhofer Institute for Factory Operation and Automation IFF has partnered with companies to create use case labs where the manufacturing sector can present its automation requirements and devise customized smart robotics solutions. The cutting-edge Lamarr Institute, one of five university AI competence centers across Germany to receive ongoing funding as part of the German federal government’s AI strategy, is designing a new generation of artificial intelligence that will be powerful, sustainable, trustworthy, and secure and contribute to resolving key challenges in industry and society. The Fraunhofer Institute for Material Flow and Logistics IML is contributing in various ways, including with its PACE Lab research infrastructure.

This past July also saw the launch of the Robotics Institute Germany (RIG), which is to become a central point of contact for all aspects of robotics in Germany. The competence network is led by the Technical University of Munich (TUM) and has received 20 million euros in funding from the German Federal Ministry of Education and Research (BMBF). Three Fraunhofer institutes — IPA, IML, and the Fraunhofer Institute for Optronics, System Technologies and Image Exploitation IOSB — are all involved. The goals of the RIG are to establish internationally competitive research on AI-based robotics in Germany, use research resources effectively, provide targeted support for talent in the field of robotics, and simplify and advance the transfer of research findings to industry, logistics companies, and the service sector.